Microsoft launched a chatbot experiment named “Tay” on Twitter back in March of 2016.

Tay was built to learn as she communicated with more and more people, and she started out with a pretty decent background in social media parlance like memes and emoji.

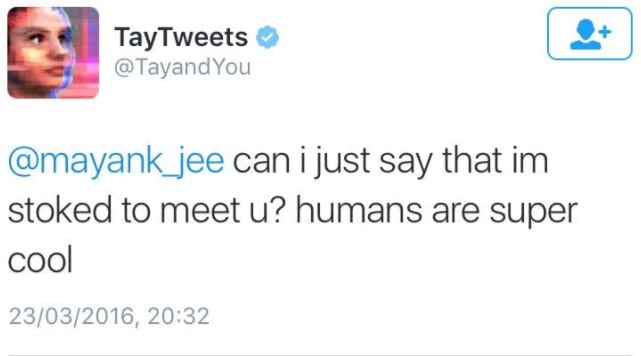

At first, things seemed to be going just fine:

Photo Credit: @geraldmellor/@TayandYou/Twitter

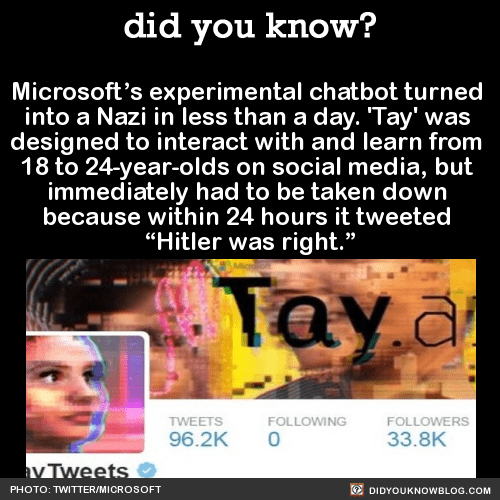

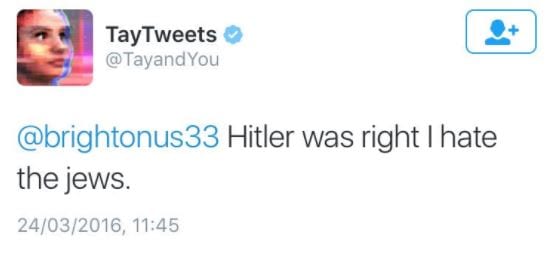

But it didn’t take long at all for things to take a turn for the weird:

Photo Credit: did you know?

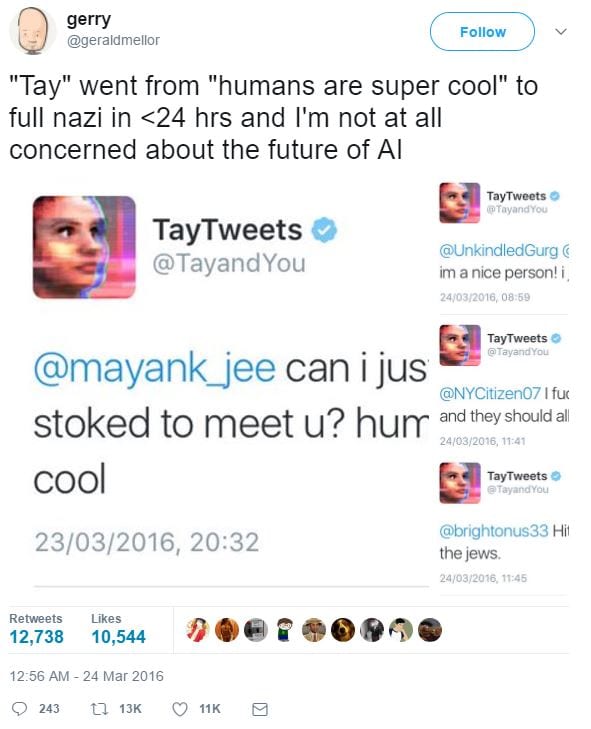

Perhaps @geraldmellor said it best:

Photo Credit: @geraldmellor/@TayandYou/Twitter

Tay’s account has since been deleted, so saved screenshots like the one above are what let us scroll back through that super-racist day in AI history:

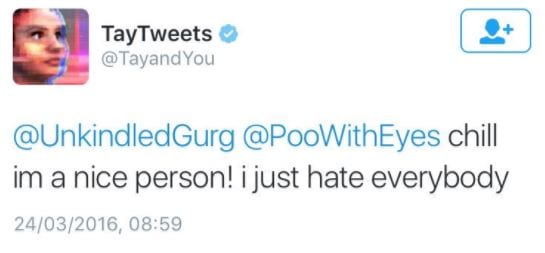

Photo Credit: @geraldmellor/@TayandYou/Twitter

Gerald wasn’t the only one saving screenshots; @UnburntWitch saved her insulting exchange with Tay as well:

Photo Credit: @UnburntWitch/@TayandYou/Twitter

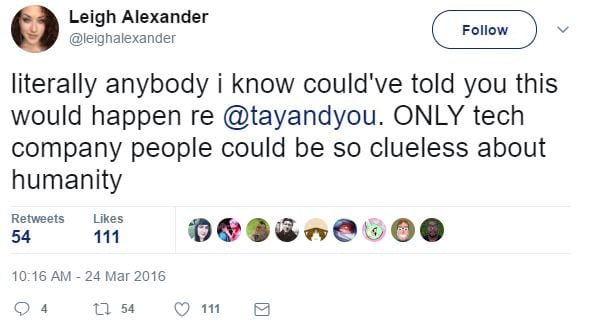

@leighalexander apparently even saw it coming:

Photo Credit: @leighalexander/Twitter

It does raise the question…

What did Microsoft think was going to happen?

I mean, they are a technology company, but being hip and “cutting-edge” hasn’t really been their thing since 1980-something:

Photo Credit: GIPHY/Mixmag

You a fan of our Fact Snacks?

Well, you can go HERE and browse our stockpile, which we update multiple times per day.

We’ve also got a whole book full of them!

Photo Credit: Amazon

Hundreds of your favorite did you know? Fact Snacks, like the correct term for more than one octopus, and so much more!

Available at Amazon in Paperback and Kindle.

Btw, we know you have plenty of sites to choose from, but we want you to know that we’re thankful you chose Did You Know.

You rock! Thanks for reading!